Advanced Concepts in Visual Servoing

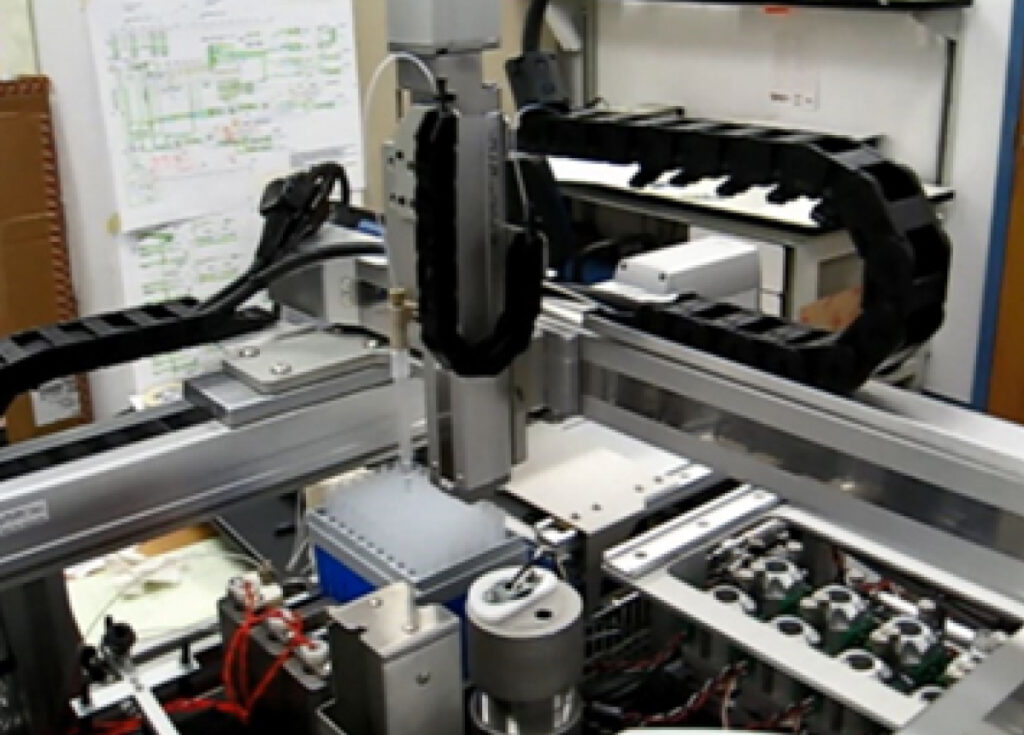

Visual servoing is a robotics technique that uses visual feedback to control a robot's motion. It runs as a closed loop: cameras (or other vision sensors) capture images, these images are processed to extract useful features, and those features guide the robot's actions in real time. Here are some advanced concepts in visual servoing: